Generative AI: The Technology Behind ChatGPT by Bhusan Chettri

In recent years, Artificial Intelligence (AI) has seen tremendous growth, leading to the development of various AI models with diverse capabilities. One such ground-breaking advancement is Generative AI, a subset of AI that enables machines to generate new data instances that resemble existing ones. One of the most popular applications of Generative AI is ChatGPT, a language model developed by OpenAI, an American AI company. In this article, Bhusan Chettri introduces Generative AI by providing its background, explaining its different types, and discussing how it underpins ChatGPT, one of the most widely used and influential AI applications worldwide.

Background. Generative AI is a branch of Artificial Intelligence that focuses on creating new data instances based on patterns it has learned from existing data. It allows machines to generate creative content, such as images, music, or text, by learning the underlying distribution of the data.

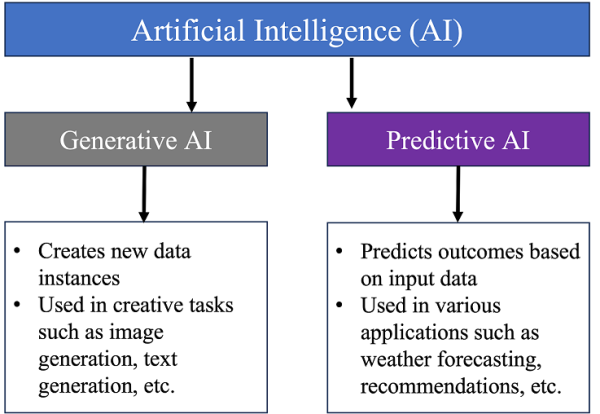

Figure 1: Generative AI and Predictive AI – a schematic overview.

AI systems can be broadly grouped into two types, Generative AI and Predictive AI, as illustrated in Figure 1. While both are subsets of Artificial Intelligence, they have distinct approaches and purposes. As discussed earlier, Generative AI focuses on generating new data instances. It learns patterns from existing data and uses that knowledge to produce new content that resembles the original data distribution. This type of AI is used in creative tasks, such as image and text generation, and is responsible for the capabilities of language models like ChatGPT. ChatGPT uses deep learning techniques to interpret natural language and generate human-like responses to text inputs. It can engage in conversations, answer questions, and provide useful information.

Predictive AI, on the other hand, is designed to predict specific outcomes from given input data. It uses historical data to make predictions about future events or trends. This type of AI is widely used in recommendation systems, weather forecasting, and financial analysis, among others.
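The difference between the two types can be illustrated with a toy example. The sketch below is only an illustration under simple assumptions: the temperature values are made up, a Gaussian fit stands in for a generative model, and a moving average stands in for a predictive model. Real systems use far richer models for both roles.

```python
import random
import statistics

# Hypothetical toy data: daily temperatures (illustrative values only).
history = [21.0, 22.5, 19.8, 23.1, 20.4, 22.0, 21.7]

def fit_gaussian(data):
    """Learn a very simple data distribution: its mean and standard deviation."""
    return statistics.mean(data), statistics.stdev(data)

def generate(mean, std, n, seed=0):
    """Generative use: draw new samples that resemble the training data."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]

def predict_next(data, window=3):
    """Predictive use: forecast the next value as a moving average."""
    return statistics.mean(data[-window:])

mean, std = fit_gaussian(history)
new_samples = generate(mean, std, n=5)   # new data instances
forecast = predict_next(history)         # a single predicted outcome

print("generated:", [round(x, 1) for x in new_samples])
print("forecast:", round(forecast, 2))
```

The generative function returns several plausible new values, while the predictive function returns one estimate of a specific future outcome.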

Generative AI encompasses various models, each with its own approach to generating data. Two popular types are Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs). VAEs are generative models that encode input data into a latent space representation and then decode points from that latent space to generate new data. They are widely used in image generation tasks. GANs, on the other hand, consist of two neural networks: a generator and a discriminator. The generator creates new data instances, while the discriminator tries to distinguish between real and generated data. GANs have been employed for generating realistic images, videos, and audio.
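The adversarial setup of a GAN can be sketched in a few lines. This is a minimal illustration, not a trainable implementation: the one-dimensional linear generator, the logistic discriminator, and all parameter names (`a`, `b`, `w`, `c`) are assumptions made for this example, and the generator loss shown is the commonly used non-saturating variant.

```python
import math
import random

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def generator(z, a, b):
    """Map random noise z to a synthetic data point (hypothetical linear form)."""
    return a * z + b

def discriminator(x, w, c):
    """Return the estimated probability that x is a real data point."""
    return sigmoid(w * x + c)

def gan_losses(real, fake, w, c):
    """Cross-entropy losses for the two competing networks."""
    eps = 1e-12  # avoids log(0)
    d_real = [discriminator(x, w, c) for x in real]
    d_fake = [discriminator(x, w, c) for x in fake]
    # The discriminator wants d_real near 1 and d_fake near 0.
    d_loss = (-sum(math.log(p + eps) for p in d_real) / len(real)
              - sum(math.log(1.0 - p + eps) for p in d_fake) / len(fake))
    # The generator wants the discriminator to rate its samples as real.
    g_loss = -sum(math.log(p + eps) for p in d_fake) / len(fake)
    return d_loss, g_loss

rng = random.Random(0)
real = [rng.gauss(4.0, 1.0) for _ in range(8)]                         # "real" data
fake = [generator(rng.gauss(0.0, 1.0), a=1.0, b=0.0) for _ in range(8)]
d_loss, g_loss = gan_losses(real, fake, w=0.0, c=0.0)                  # untrained discriminator
print(d_loss, g_loss)
```

In training, the two losses would be minimized in alternation, so that each network improves against the other.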

Transformer. Alongside these models, the Transformer has emerged as the architecture that powers language models like ChatGPT. The Transformer, introduced by Google researchers in the 2017 paper "Attention Is All You Need", has transformed the field of Natural Language Processing (NLP). Unlike earlier sequence models such as recurrent neural networks (RNNs), including long short-term memory (LSTM) networks, which process a sequence one step at a time, the Transformer uses self-attention to process all positions of a sequence in parallel. This makes it efficient to train and well suited to handling long-range dependencies. The self-attention mechanism allows the model to weigh the importance of each word in a sentence relative to the other words in the same sentence. This capability enables the Transformer to capture contextual information effectively, making it well suited to language-related tasks.
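The weighting described above can be sketched as scaled dot-product self-attention. The example below is a simplified illustration: it assumes that queries, keys, and values are all equal to the input vectors, whereas a real Transformer first applies learned projection matrices and uses multiple attention heads. The three two-dimensional token vectors are made up for the example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X.

    Simplifying assumption: no learned projections are applied.
    X is a list of token vectors, one per word.
    """
    d = len(X[0])
    outputs, weights = [], []
    for q in X:  # every token attends to every token, itself included
        scores = [dot(q, k) / math.sqrt(d) for k in X]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all token vectors.
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, X)) for i in range(d)])
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical word vectors
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one word attends to every word in the sentence. Because the rows do not depend on each other, they can be computed in parallel, which is the property that distinguishes the Transformer from step-by-step recurrent models.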

Applications. The Transformer model has had a profound impact on various research domains and has proved to be a versatile tool for solving a wide range of problems. Some of its key applications include:

- Natural Language Processing (NLP): The Transformer has revolutionized NLP tasks such as machine translation, text summarization, sentiment analysis, and question-answering systems. Its ability to understand the context and relationships within text has significantly improved the accuracy and fluency of language-based applications.

- Computer Vision: The Transformer has also found applications in computer vision tasks, demonstrating its effectiveness in image recognition, object detection, and image generation. Its parallel processing capabilities make it suitable for handling large-scale image datasets.

- Speech Synthesis: The Transformer model has been employed in speech synthesis tasks, such as text-to-speech systems, where it converts written text into natural-sounding speech.

- Medicine and Healthcare: The Transformer's ability to process and understand textual data has enabled it to contribute to medical research and healthcare applications. It has been utilized for analyzing medical records, extracting relevant information from medical literature, and aiding in medical diagnosis.

“While Generative AI, including the Transformer model, has achieved remarkable advancements, it also faces several challenges and drawbacks,” says Bhusan Chettri. Some of the prominent challenges that need to be addressed are:

1. Data Bias: Generative AI models can inadvertently learn biases present in the training data, leading to biased outputs. This can be a concern, especially when these models are used in decision-making processes.

2. Ethical Considerations: The use of Generative AI raises ethical concerns, particularly when it comes to generating fake content, misinformation, or deepfakes. Ensuring responsible and ethical deployment of such technology is essential.

3. Computational Resources: Training large-scale Generative AI models like the Transformer requires significant computational power and resources, which puts them out of reach for many individuals and smaller organizations.

Conclusion. Generative AI, with its ability to create new data instances, has brought about significant advancements in artificial intelligence. ChatGPT, powered by the Transformer model, exemplifies the potential of Generative AI in language processing tasks. Since its introduction in the "Attention Is All You Need" paper, the Transformer in particular has spread to natural language processing, computer vision, speech synthesis, and many other domains, and has reshaped the field of AI. However, as with any powerful technology, Generative AI also poses challenges and ethical considerations. Responsible use and continued research will be crucial to harness its full potential while addressing its drawbacks. As we move forward, the synergy between Generative AI and other AI branches promises to shape a future where AI systems like ChatGPT become even more proficient and beneficial to society. The next article will take a deeper look into ChatGPT and other recent Generative AI applications. Stay tuned.

Media Contact

Company Name: Borac Solutions

Contact Person: Media Relations

City: Gangtok

State: Sikkim

Country: India

Website: https://boracsolutions.com/